What is in those Containers?

Baby Steps in Understanding Containerization

Junior Dev:

It's working on my laptop. I don't know why it's not working on the server.

Team Lead:

OK. Let's deploy your laptop.

Have you heard of containerization? The team lead's sarcastic response is just a lay explanation of what it means.

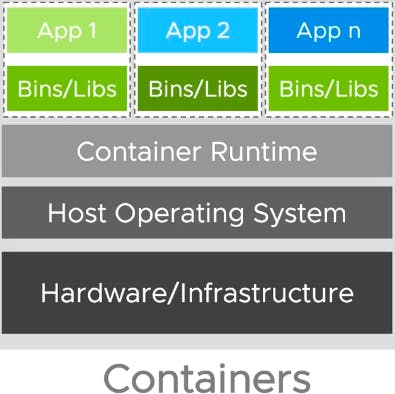

Just as a shipping container is a standard way to package a collection of goods for transport on a ship, a software container is a standard way to package an application. Containerization is an approach to provisioning an application, with its configuration and dependencies, as one logical package. It abstracts the application from the host's hardware, environment, and resources.

This makes it possible to move the package from desktop to desktop, or from cloud to cloud, without dependency or configuration issues. The packaging also isolates the application from other applications running on the host. Moreover, a single host can run many containers at once, limited only by the resources available on that host.

Here comes Docker

According to TechBeacon:

Docker is the most standard way of packaging applications as containers. It extends a common container format called Linux Containers (LXC), with a high-level API that provides a lightweight virtualization solution that runs processes with access to resources (CPU, memory, I/O, network, and so on) in isolation and doesn't require starting any virtual machines.

The crux of the matter is that Docker depends on two main Linux kernel features, namespaces and control groups (cgroups), for process isolation and resource management. Current Docker releases no longer use LXC itself, but they still rely on these same kernel features.

Namespaces limit what an application can see, including the operating environment, process trees, and file systems, while cgroups limit the resources an application can use, including CPU, memory, and I/O.
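
On a Linux host, both features can be seen directly through the /proc filesystem. The commands below are a minimal sketch, assuming a Linux machine with /proc mounted (they will not work as written on macOS or Windows outside a Linux VM). They inspect the current shell rather than a container; a process inside a container would typically show different namespace identifiers than a process on the host.

```shell
# Assumption: Linux with /proc mounted. $$ is the PID of the current shell.

# Each symlink names one namespace (mnt, pid, net, uts, ipc, ...) that the
# shell belongs to. Processes in the same namespace show the same identifier.
ls -l "/proc/$$/ns"

# The control group (or groups) the shell is assigned to. Resource limits
# such as CPU and memory caps are attached to these groups.
cat "/proc/$$/cgroup"
```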

So what is a container?

According to the Docker documentation:

A container is simply another process on your machine that has been isolated from all other processes on the host machine. That isolation leverages kernel namespaces and cgroups.

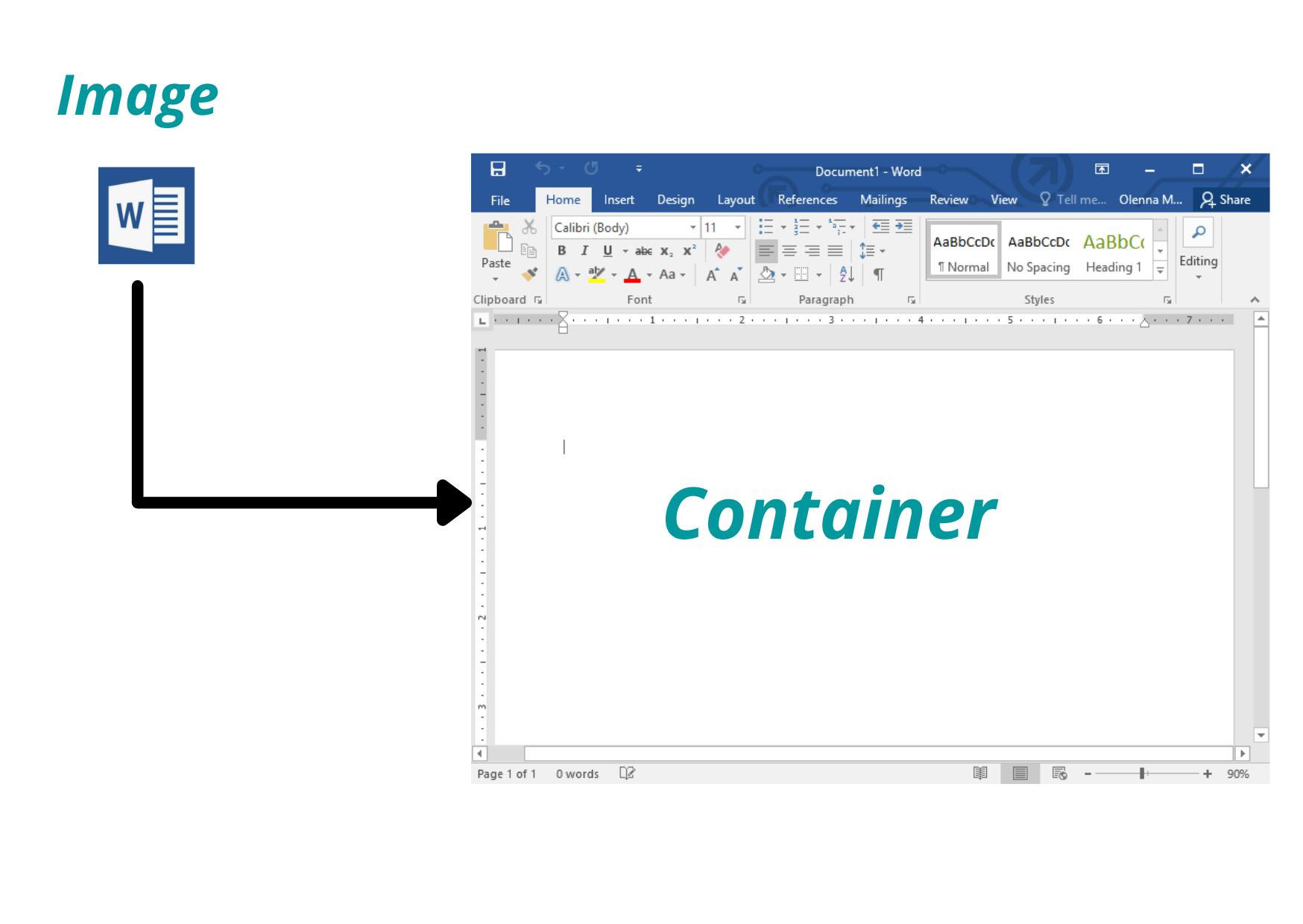

A container is always created from an image.

What is an image?

According to the Docker documentation:

An image includes everything you need to run an application - the code or binary, runtime, dependencies, and any other file system objects required.

So what's the difference?

On your computer's desktop, you have an app icon that launches, say, MS Word.

In this analogy, the icon is like the image, while the running instance of MS Word is the container.

You get it, right?

Just as you can have several instances of MS Word running, you can have several containers created from one image running on a single host machine.

So what we really need to know is how to build a Docker image.

OK, cool. Let's go ahead.

How do we build a Docker Image?

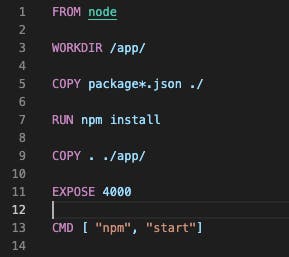

First, we need a Dockerfile. A Dockerfile is a text file of instructions that describes how to build a Docker image and how to start containers from it. The sample Dockerfile walked through below builds a containerized Node.js application.
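
For reference, here are the instructions explained in the next section assembled into one file. This is a sketch that writes them to a file named Dockerfile in the current directory using a shell heredoc. It assumes you are in the root of your Node.js project, next to package.json, and it overwrites any Dockerfile already there.

```shell
# Write the sample Dockerfile into the current directory.
# The quoted 'EOF' keeps the shell from expanding anything inside the heredoc.
cat > Dockerfile <<'EOF'
FROM node
WORKDIR /usr/src/app
COPY package*.json ./
RUN npm install
COPY . .
EXPOSE 4000
CMD ["npm", "start"]
EOF
```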

Understanding a Dockerfile

Understanding a Dockerfile is fairly easy. Let's take it line by line.

FROM node

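The above line tells Docker which base image to use for your application. In this case, the image is built on top of the node image. Because no tag is given, Docker uses node:latest; pinning a specific version tag makes builds more reproducible.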

WORKDIR /usr/src/app

This instructs Docker to use /usr/src/app as the working directory, creating it if it does not exist, and to run the instructions that follow from this path.

COPY package*.json ./

We need Docker to copy our package.json and package-lock.json files to our working directory in the Docker image.

RUN npm install

This line tells Docker to install the dependencies required to run our application.

COPY . .

We need to copy our source code to the Docker image, and the line above makes that happen. It takes all the files in the build context (the current directory on our local machine, given the build command used later) and copies them into the working directory of the image.
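
One caveat with copying everything: if a node_modules directory exists on the local machine, COPY . . would send it to the image too and overwrite the modules that RUN npm install just produced. A common way to avoid this is a .dockerignore file next to the Dockerfile. The sketch below is an assumption about what a typical Node.js project would exclude, not part of the original sample.

```shell
# Create a .dockerignore in the project root (next to the Dockerfile).
# Paths listed here are left out of the build context, so COPY never sees them.
cat > .dockerignore <<'EOF'
node_modules
npm-debug.log
EOF
```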

EXPOSE 4000

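At this point, we declare the port the application listens on. EXPOSE does not publish the port by itself; it documents that the container listens on port 4000, and the port is actually published when the container is run.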

CMD [ "npm", "start"]

Finally, the last line tells Docker which command to run when a container starts from our image.
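
npm start runs the start script defined in package.json, so the project needs one. As a minimal sketch, the commands below create a tiny application that fits this Dockerfile. The file name server.js, the package name, and the response text are hypothetical, chosen only for illustration. Run this in an empty project directory, since it overwrites package.json and server.js.

```shell
# Hypothetical minimal project: a package.json with a "start" script...
cat > package.json <<'EOF'
{
  "name": "node-app",
  "version": "1.0.0",
  "private": true,
  "scripts": {
    "start": "node server.js"
  }
}
EOF

# ...and a server that listens on the port the Dockerfile exposes.
cat > server.js <<'EOF'
// Minimal HTTP server using only Node's built-in http module.
const http = require("http");

http
  .createServer((req, res) => res.end("Hello from the container\n"))
  .listen(4000, () => console.log("Listening on port 4000"));
EOF
```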

Building an Image

We use the docker build command from the command line. The --tag option gives the image a name for easy reference, and the trailing dot tells Docker to use the current directory as the build context.

$ docker build --tag node-app .

Running an Image

After creating the image, the next step is to run it with the docker run command.

$ docker run --publish 4000:4000 node-app

The --publish option maps a port on our host computer to a port in the container, in the form host-port:container-port. Here, port 4000 on the host forwards to port 4000 in the container.
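
Since an image is only a template, the same image can back several containers at once, as in the MS Word comparison earlier. The sketch below assumes Docker is installed and that the node-app image from the build step exists; the container names node-app-1 and node-app-2 and the second host port 4001 are hypothetical. Each container listens on port 4000 internally, so only the host side of the mapping has to differ.

```shell
IMAGE=node-app   # tag given to the image in the build step

if command -v docker >/dev/null 2>&1 && docker image inspect "$IMAGE" >/dev/null 2>&1; then
  # --detach runs each container in the background and prints its ID.
  docker run --detach --name node-app-1 --publish 4000:4000 "$IMAGE"
  docker run --detach --name node-app-2 --publish 4001:4000 "$IMAGE"

  # List the running containers that were created from this image.
  docker ps --filter "ancestor=$IMAGE"

  # Stop and remove both containers when done.
  docker rm --force node-app-1 node-app-2
else
  echo "Docker or the $IMAGE image is not available; nothing to run."
fi
```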

Conclusion

The issue in the opening scenario was probably caused by differences in setup or environment between the developer's laptop and the server. Docker's value comes from standardizing development and deployment environments, including specific settings, runtime environments, and even the versions of dependencies.

Credits

The essential guide to software containers for application development